My experience at AWS Summit London 2025!

Introduction:

AWS Summit London is a free cloud event held at ExCeL London. From 8:00 am you can pick up your badge, grab a schedule and wander an expo floor divided into zones like AI/ML, databases, security, networking and serverless.

The day kicks off with a one-hour keynote featuring new AWS services and customer success stories, then you choose from sessions, chalk talks, workshops, or live demos.

The expo has partner and startup booths where you can try out data pipeline frameworks, edge compute appliances and more, and see how companies are using their own services. Engineers and founders are on hand to run live demos and dive into how their products solve real problems.

AWS experts and partners are everywhere too, ready to answer questions.

My highlights of the day:

I’ll give a breakdown of some of my favourite highlights. Some are quite technical, but I’ll break them down so you can follow each aspect and see how AWS uses its services.

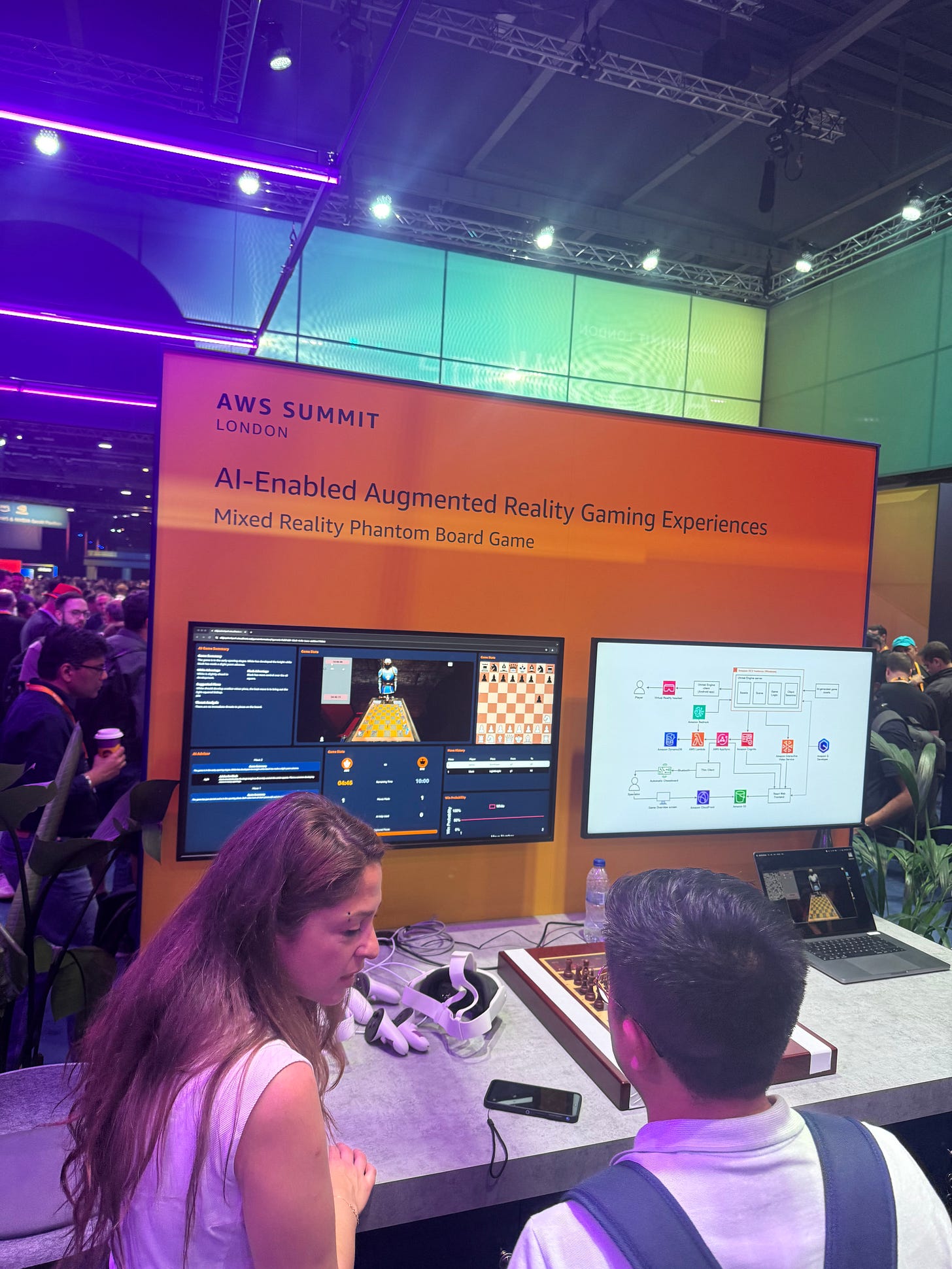

Augmented reality gaming experience:

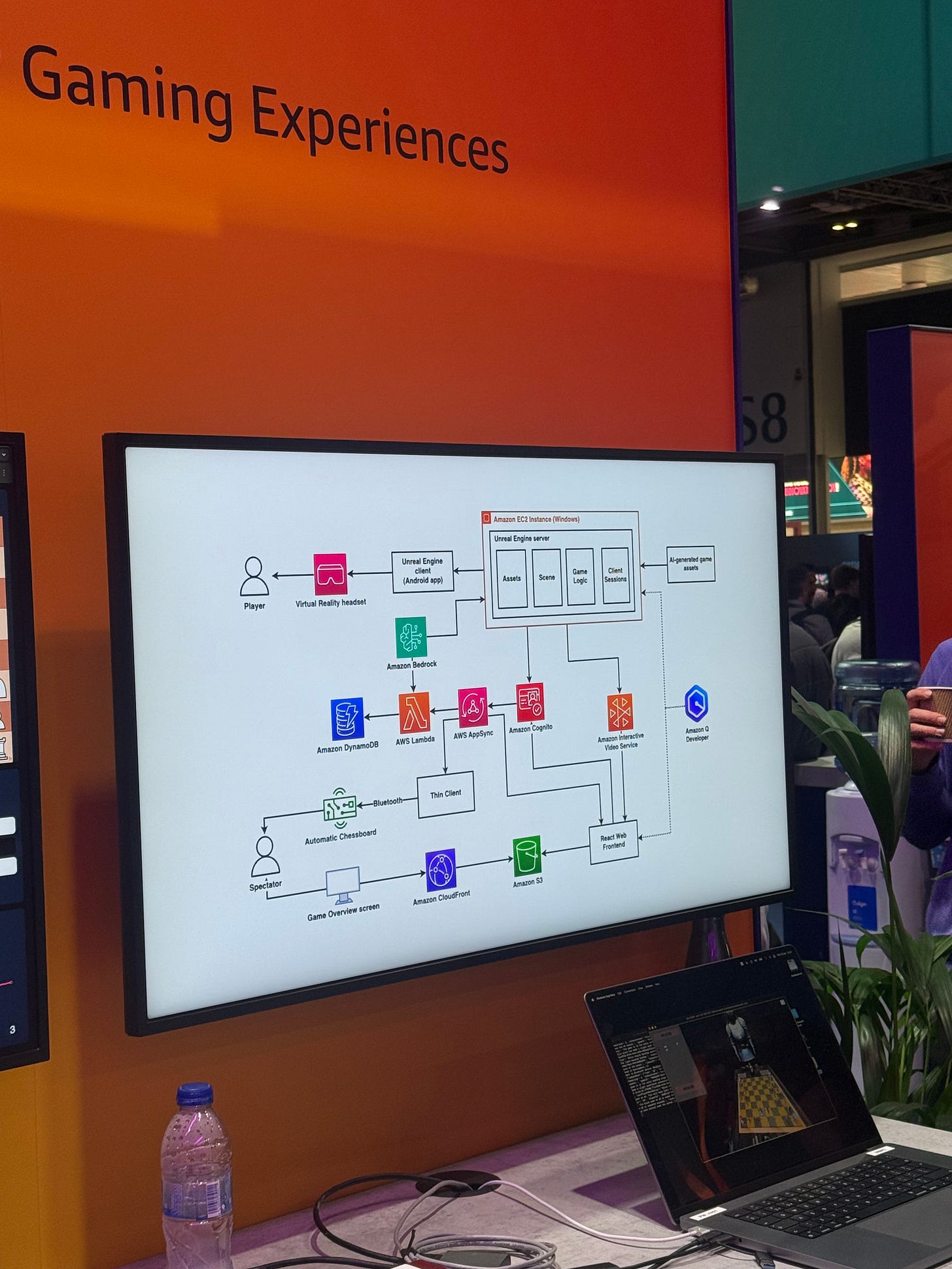

This demo turned a regular tabletop into an AR chess game using a full AWS stack. Amazon Bedrock generated AI-driven chess opponents and move suggestions on the fly. Every piece’s position and each player’s stats were stored in DynamoDB, so the game state stayed consistent across sessions. Lambda functions ran the game logic: validating moves, checking for checkmate and updating scores!
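To make the Lambda part less abstract, here is a minimal sketch of what a move-validation function could look like. This is my own illustration, not the code from the demo: the event fields (`game_id`, `player`, `from`, `to`), the board layout and the `GAMES` dict are all hypothetical, and a plain Python dict stands in for the DynamoDB table so the example runs anywhere. Real chess rules (how each piece moves, check, checkmate) are left out.

```python
# Hypothetical sketch: an in-memory dict stands in for the DynamoDB table.
GAMES = {
    "game-1": {
        "turn": "white",
        "board": {"e2": "white_pawn", "e7": "black_pawn"},
    }
}


def validate_move(game, player, src, dst):
    """Return an error string, or None if the move passes these basic checks."""
    if game["turn"] != player:
        return "not your turn"
    piece = game["board"].get(src)
    if piece is None or not piece.startswith(player):
        return "no piece of yours on that square"
    target = game["board"].get(dst)
    if target is not None and target.startswith(player):
        return "destination holds your own piece"
    return None  # piece-specific movement rules are omitted in this sketch


def handler(event, context=None):
    """Lambda-style entry point: validate a move, then store the new state."""
    game = GAMES[event["game_id"]]
    error = validate_move(game, event["player"], event["from"], event["to"])
    if error:
        return {"ok": False, "error": error}
    # Apply the move and hand the turn to the other player.
    game["board"][event["to"]] = game["board"].pop(event["from"])
    game["turn"] = "black" if event["player"] == "white" else "white"
    return {"ok": True, "turn": game["turn"]}
```

Calling `handler({"game_id": "game-1", "player": "white", "from": "e2", "to": "e4"})` moves the pawn and passes the turn to black. In a real deployment the dict lookup and update would presumably be DynamoDB reads and writes instead.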

AppSync handled real-time syncing, so both players saw each other’s moves instantly. Amazon Cognito managed user sign-in and authentication, keeping games private and secure.

All the 3D models and textures sat in S3 and were delivered globally through CloudFront, so assets loaded fast with minimal lag. It was a slick proof of how you can blend physical boards with cloud-powered AR to create a seamless, interactive experience. The accompanying image shows the architecture of this game! Yes, I know, how cool!

Hosting DeepSeek-R1 on Amazon EKS

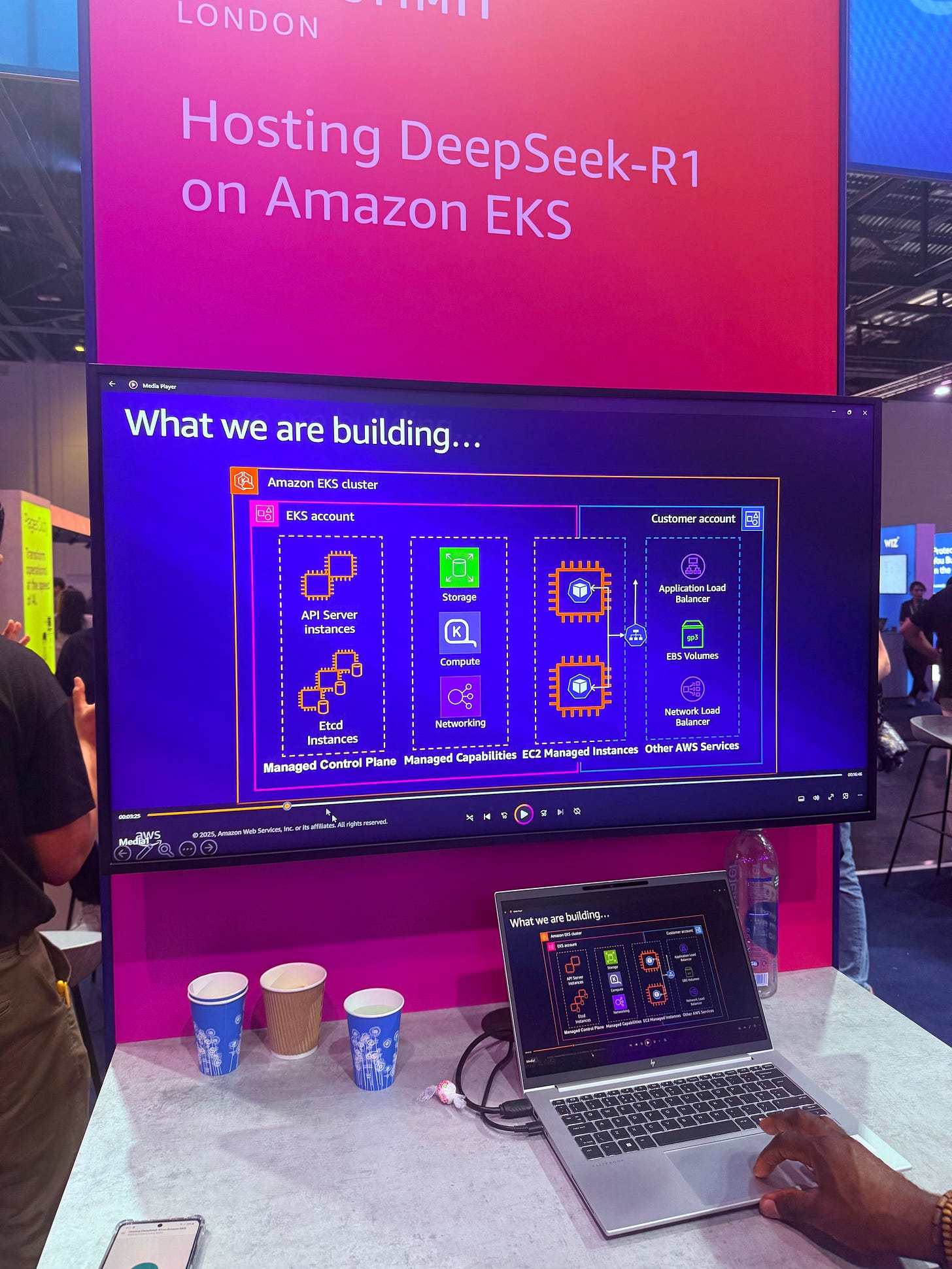

At AWS Summit London, this booth showed how they had deployed an AI model called DeepSeek-R1 using Amazon EKS (Elastic Kubernetes Service). EKS is basically a managed version of Kubernetes that AWS takes care of for you. It lets you run containers (like Docker apps) at scale without having to run the Kubernetes control plane, and many of the complicated parts around networking, cluster upgrades and security, all by yourself.

The architecture diagram explains how DeepSeek-R1 is deployed on Amazon EKS. The Amazon EKS cluster sits at the centre, with its Kubernetes control plane managing your containerised application.

On the big screen, the architecture diagram also splits the responsibilities. AWS manages the control plane of the cluster (the brain of Kubernetes), while you (the customer) manage things like your own load balancers, volumes for data and the actual app you’re running.

In this case, DeepSeek-R1 (an AI language model similar to the ones behind ChatGPT) is being run inside the EKS cluster. That means it’s running in containers spread across powerful EC2 instances, possibly with GPUs, and connected to AWS services like storage, networking and security. This setup gives teams the ability to deploy advanced AI apps quickly and reliably, scaling up or down as needed and using the power of the cloud to handle big workloads without needing to build their own data centre.
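If you’re wondering what “running in containers, possibly with GPUs” looks like in practice, here is a rough sketch of the kind of Kubernetes Deployment you would hand to the cluster. It is not the booth’s actual configuration: the image name, port and replica count are made up for illustration. The one real detail is the `nvidia.com/gpu` resource limit, which is how a pod asks for a GPU when the NVIDIA device plugin is installed on the nodes.

```python
import json


def build_deployment(name, image, replicas=1, gpus=1):
    """Build a Kubernetes Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Hypothetical port for the model server.
                            "ports": [{"containerPort": 8000}],
                            # Ask the scheduler for GPU-equipped nodes.
                            "resources": {"limits": {"nvidia.com/gpu": gpus}},
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # The image name below is a placeholder, not a real registry path.
    manifest = build_deployment(
        "deepseek-r1", "example.com/deepseek-r1-server:latest", replicas=2
    )
    print(json.dumps(manifest, indent=2))
```

Kubernetes accepts manifests as JSON as well as YAML, so the printed output could be saved to a file and applied with `kubectl apply -f`. Scaling up or down is then mostly a matter of changing `replicas`.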

Edge / Networking / Security Observability

This zone was all about how AWS helps teams monitor and secure their infrastructure, especially when it’s distributed across cloud environments, edge locations and hybrid networks. The main focus here was Amazon Security Lake, a service that helps you centralise and analyse all your security-related data in one place. It pulls logs and alerts from various sources like:

AWS CloudTrail: records API activity (who did what and when).

VPC Flow Logs: capture network traffic going in and out of your AWS environment.

DNS Logs, Security agents, GuardDuty: for threat detection and behaviour tracking.

All this data gets stored in Amazon Security Lake, which is built on top of Amazon S3 for long-term, cost-effective storage. It follows the “write once, read many” model, meaning the data can be reused by many tools without duplication.

Instead of manually gathering logs from different services and trying to piece them together, Security Lake automatically normalises everything into a common schema (the Open Cybersecurity Schema Framework, or OCSF). That means security teams and their tools (InfoSec, SIEM tools, threat hunting apps, etc.) can run analytics, detect threats faster and automate responses.
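Here is a small sketch of why a shared format matters. It takes a CloudTrail-style event and a VPC Flow Log line, which look nothing alike, and maps both onto one simple shape so a single query works across them. The shared field names (`source`, `time`, `src_ip`, `activity`) are my own simplification and not the real schema Security Lake uses; the input field names follow the CloudTrail record format and the default (version 2) VPC Flow Log format.

```python
from datetime import datetime, timezone


def from_cloudtrail(record):
    """Map a CloudTrail event (a dict) onto the shared shape."""
    when = datetime.strptime(record["eventTime"], "%Y-%m-%dT%H:%M:%SZ")
    return {
        "source": "cloudtrail",
        "time": int(when.replace(tzinfo=timezone.utc).timestamp()),
        "src_ip": record["sourceIPAddress"],
        "activity": record["eventName"],
    }


def from_vpc_flow_log(line):
    """Map one default-format VPC Flow Log line onto the shared shape."""
    fields = line.split()
    # Default field order: version account-id interface-id srcaddr dstaddr
    # srcport dstport protocol packets bytes start end action log-status
    return {
        "source": "vpc-flow-logs",
        "time": int(fields[10]),   # start of the capture window (Unix seconds)
        "src_ip": fields[3],
        "activity": fields[12],    # ACCEPT or REJECT
    }


def events_from_ip(events, ip):
    """One filter now works across every log source."""
    return [event for event in events if event["src_ip"] == ip]
```

Once both records share the same shape, “show me everything this IP address did” becomes one filter instead of a separate parser per log type, which is the idea behind Security Lake doing the normalising for you.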

Sports Zone Freekick Challenge

This demo showed how AWS services are used to track, analyse and display live football match data such as ball movement, player positioning and statistics at the summit’s on-site pitch.

Let’s break it down simply into two parts, real-world input and AWS services:

It starts with real-world input: ball sensors, player tracking, cameras and even NVIDIA Jetson devices (used for edge computing). These collect real-time stats like speed, location, ball possession and more.

This data gets passed into various AWS services:

AWS AppSync - manages real-time communication between front-end apps and backend.

Amazon DynamoDB - stores game state, player stats, scores.

AWS Lambda - runs backend logic like calculating leaderboards.

Amazon ECS - hosts microservices or containers for processing (e.g. game engine or match controller).

Amazon Bedrock - used here for generating live commentary using GenAI models!

Guardrails (Amazon Bedrock Guardrails) - help keep the generated commentary safe, appropriate and on-topic.

Amazon Cognito - handles player or user authentication for apps.

The attendee web app, operator station, scoreboard and leaderboard display are all powered by this backend.

Feeds show live match commentary, stats, and visuals.

Amazon S3 + CloudFront - store and deliver media and content quickly.

AWS WAF - protects the apps from cyber threats.

Amazon SageMaker - trains ML models (e.g. ball tracking or player predictions).

This may look overwhelming but don’t worry, all of these services work together to power a live football game with AI and AWS. Sensors and cameras feed real-time data into AWS services, which then analyse the game and update a live scoreboard. They can also generate commentary and stream it all securely through the cloud.
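To show how small each piece can be, here is a sketch of the “calculating leaderboards” logic that Lambda is described as running. I’m assuming each kick is stored as a record with a player name and a score; the field names and the scoring are hypothetical, since the demo’s real data model wasn’t shown.

```python
def build_leaderboard(kicks, top_n=3):
    """Rank players by their best single kick, highest score first."""
    best = {}
    for kick in kicks:
        player = kick["player"]
        best[player] = max(best.get(player, 0), kick["score"])
    # Ties are broken alphabetically so the display order stays stable.
    ranked = sorted(best.items(), key=lambda item: (-item[1], item[0]))
    return [
        {"rank": rank, "player": player, "score": score}
        for rank, (player, score) in enumerate(ranked[:top_n], start=1)
    ]


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    sample = [
        {"player": "Asha", "score": 72},
        {"player": "Ben", "score": 85},
        {"player": "Asha", "score": 91},
    ]
    for row in build_leaderboard(sample):
        print(row["rank"], row["player"], row["score"])
```

In the setup described above, a function like this would presumably read the kick records from DynamoDB and push the result to the scoreboard through AppSync, but that wiring is beyond this sketch.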

It’s a cool showcase of how AWS can bring together edge computing, AI/ML and cloud infrastructure for real-time sports tech.

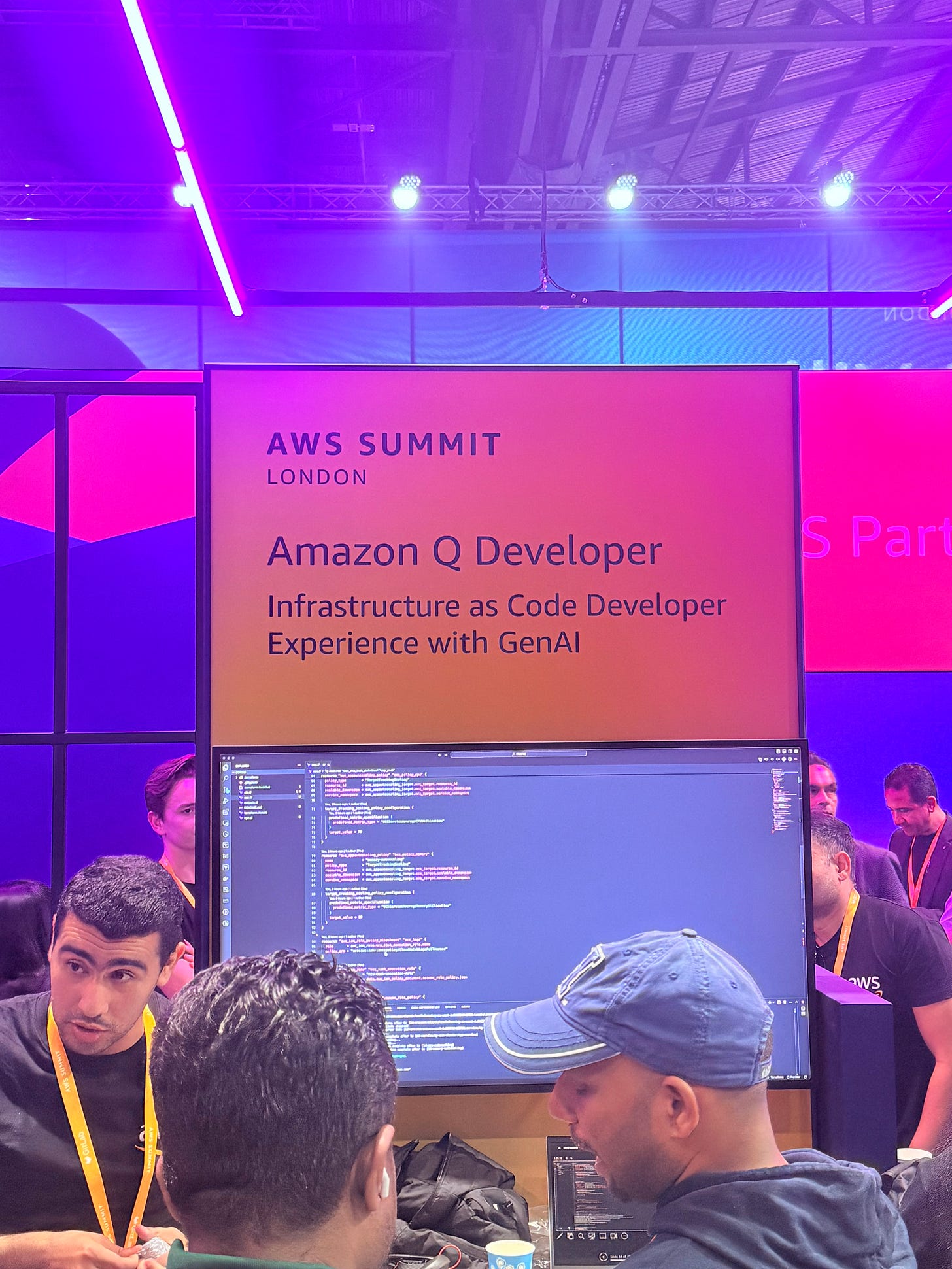

Amazon Q Developer

Amazon Q Developer is a GenAI-powered assistant for developers, built to help you write, understand and troubleshoot code, especially Infrastructure as Code (IaC) like Terraform or AWS CloudFormation. It can explain what code is doing before you make changes.

Some of the things Amazon Q Developer can do:

Write Terraform/CloudFormation code: Describe what you want in plain English and it writes the infrastructure code for you.

Scan your code for errors or security issues: Very useful for spotting problems early.

Troubleshoot errors: If something breaks during deployment, Q can help fix it.

Chat about resources: You can ask, “What does this Lambda function do?” or “Why is this bucket public?”

Create pull requests & README files: It automates documentation and GitHub tasks to save time.

If you’re working with AWS infrastructure, Terraform or DevOps pipelines, Amazon Q Developer is like having a super-smart assistant inside your IDE (like VS Code). It helps you work faster, reduce errors and learn on the go, especially if you’re still growing your IaC or cloud skills.

Ask an Architect:

At the “Ask an Architect” station, I grabbed a few minutes with a Technical Account Manager who laid out exactly what he does day-to-day: everything from reviewing customers’ cloud architectures and identifying cost or performance bottlenecks to coordinating incident response when things go sideways at massive scale.

He walked me through real examples of how AWS steps in early to design resilient, secure systems that can handle millions of requests per minute, and how his team stays plugged into customers’ roadmaps to assess risks before they hit production.

Hearing about the mix of hands-on troubleshooting, proactive guidance and long-term strategy that goes into keeping some of the world’s biggest workloads online was eye-opening. It really drove home how AWS doesn’t just sell services; it partners deeply to solve hardcore engineering challenges. I left inspired by the impact you can make in a role like that.

Networking:

Networking was the real highlight. In casual conversations, I got pointers on AWS tools I’d never tried and invites to join Slack and Discord communities where experts and community members share real-world tips. I even connected with leading engineers who shared slide decks from their talks. Those quick face-to-face moments led to invites to meetups and deep-dive workshops. What starts as a one-day event snowballs into a support network that keeps you learning, troubleshooting and pushing your projects forward long after the summit ends.

Closing thoughts:

If you’re early in your tech career, AWS Summit London is a must. You’ll get a front-row seat to the latest cloud and AI tools, pick up practical tips you won’t find in tutorials and meet everyone from AWS engineers to fellow peers who can help you level up. It’s a way to build your confidence and start networking with people you can learn from, and people you can teach too. Plus, you’ll leave with clear next steps and resources to keep learning long after the day ends.

Don’t just read about cloud tech… get hands-on, make connections and kick-start your journey.

Don’t forget to grab the AWS Summit app before you go. It’s a lifesaver for planning your day: you can browse the full agenda, bookmark the sessions you actually care about and get push reminders when a bookmarked session is about to start, so you don’t miss your favourite talk. The built-in venue map shows you the exact location of each room and demo booth, so you can spend less time juggling paper schedules.

See you at AWS Summit London 2026!